Features

Architecture

7,211 views

Technical designs and discussions about cloud-based systems.

Software

5,335 views

Articles and diagrams on writing code and solving technical problems.

Management

8,170 views

Running a company and working with teams of engineers to deliver quickly.

Creativity

7,620 views

Videos and music, graphic design, original and AI-generated art.

Architecture

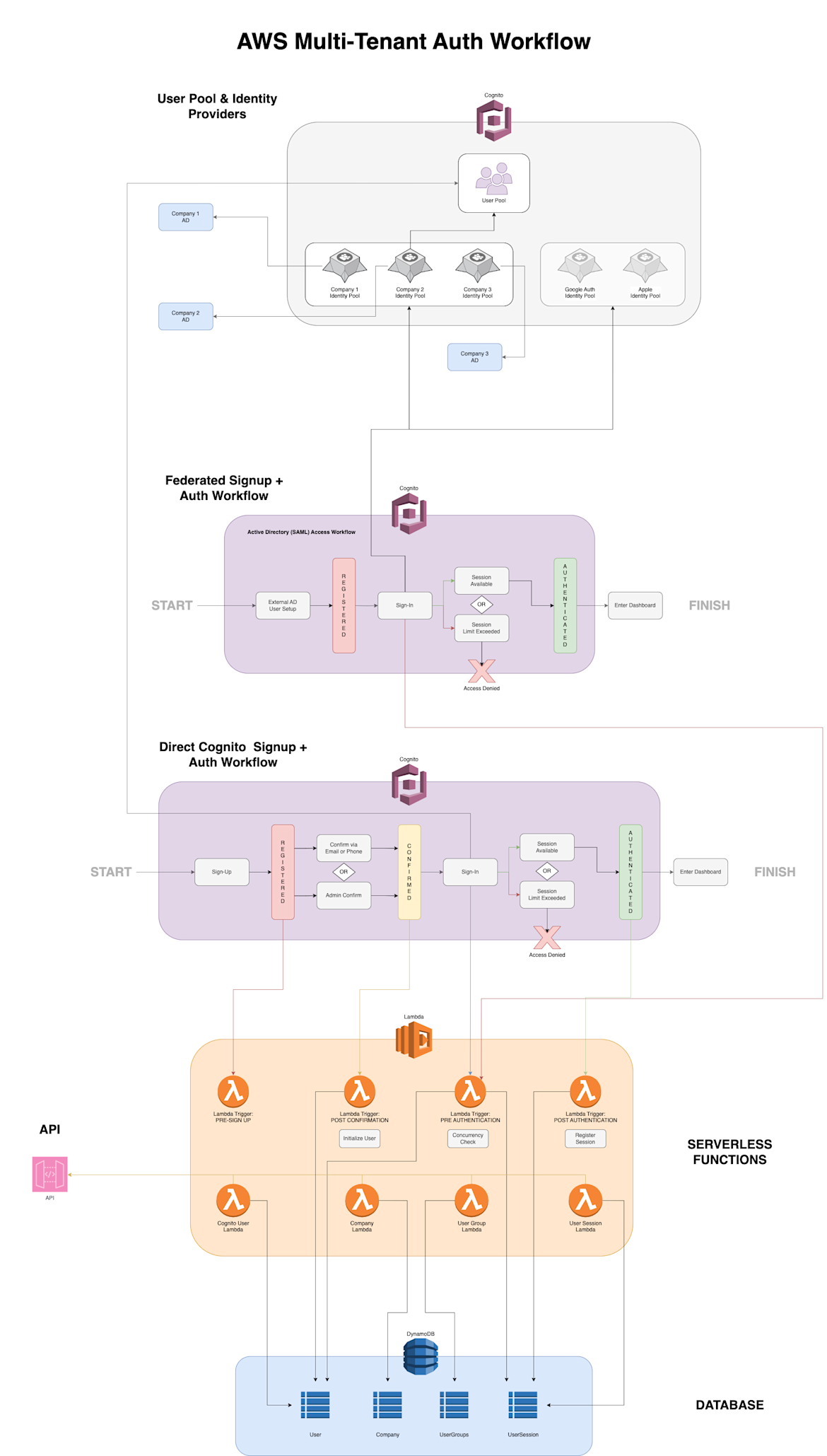

Scalable AWS Multi-Tenant Authentication: A Comprehensive Guide for Engineers

7,160 views

AWS multi-tenant auth system using Cognito, Lambda, and DynamoDB. Supports federated and direct login, custom workflows, and scalable serverless backe...

AWS AppSync Explained

6,860 views

A comprehensive dive into AWS AppSync with examples of different features and query designs. Learn how to use Lambda, DynamoDB, and HTTP data sources ...

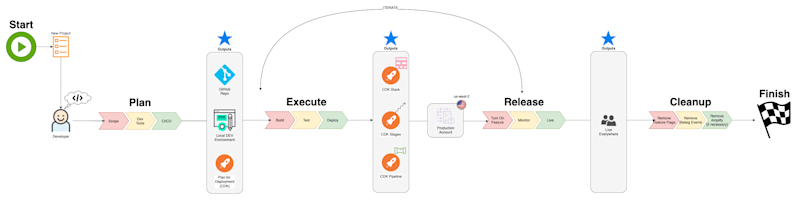

Real World Observations on CI/CD

7,375 views

Describes the transformation that happens in software development teams when CI/CD is embraced, developers own code through to production, and releasi...

Latest Videos

Johnny Cash - Big River

More Videos

Software Development

Game Development: 48 Hours to Make a Web-Based Game

9,476 views

Claude and I create a playable game from my CV where you can collect technical skills and follow the road of my professional career. Here I document t...

Electron: A New Tool for Cross-Platform App Development

5,616 views

Source code for an Electron Text-to-Speech app using AWS Polly is presented and discussed in detail. The Electron framework is discussed in detail in ...

Running Stable Diffusion in AWS

9,324 views

Describes a natural evolution when creating videos with Stable Diffusion Deforum whereby self-hosting and managing compute resources with robust graph...

AWS AppSync Explained

6,860 views

A comprehensive dive into AWS AppSync with examples of different features and query designs. Learn how to use Lambda, DynamoDB, and HTTP data sources ...

All Posts

Electron: A New Tool for Cross-Platform App Development

5,616 views

Source code for an Electron Text-to-Speech app using AWS Polly is presented and discussed in detail. The Electron framework is discussed in detail in ...

Running Stable Diffusion in AWS

9,324 views

Describes a natural evolution when creating videos with Stable Diffusion Deforum whereby self-hosting and managing compute resources with robust graph...

Soliciting Jobs for My AI Voice

5,430 views

I apply for a narration job using a demo created using ChatGPT and my synthesized voice. Let's see if I can get a job through a video of me saying wor...

24 Hours of Watch Time

9,081 views

I discuss creating and testing YouTube Shorts and the first video to pass 24 hours of watch time. I reflect on analytics and the process of creating v...

AWS AppSync Explained

6,860 views

A comprehensive dive into AWS AppSync with examples of different features and query designs. Learn how to use Lambda, DynamoDB, and HTTP data sources ...

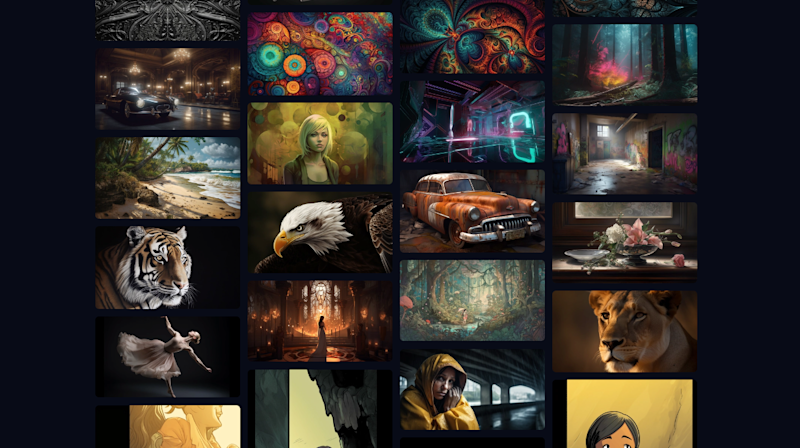

My Midjourney Profile

7,959 views

Here's a link to my Midjourney portfolio. You can check out all the images I'm creating for use on this site and my YouTube channels.

Generative AI Art Links

9,199 views

A collection of links to begin generating AI art both in the cloud for free and locally on your computer. Follow along with the links I used to go fro...

Real World Observations on CI/CD

7,375 views

Describes the transformation that happens in software development teams when CI/CD is embraced, developers own code through to production, and releasi...

Synthesizing My Voice

8,483 views

I use ElevenLabs Speech Synthesis tool to synthesize my voice in minutes. Now I have a vocal clone I use to read my articles and say whatever I don't ...

Deforum YouTube Shorts 6

5,174 views

The Wu Tang Clan, AC/DC, and Jay Z show up in 3 more YouTube shorts created with Stable Diffusion Deforum, the Rev Animated model, and 2D/3D motion ef...

Deforum YouTube Shorts 5

5,282 views

I continue to post YouTube Shorts using Stable Diffusion Deforum. Here I set them against Pink Floyd, Drake, Jay Z and Kanye West. The channel is gain...

Deforum YouTube Shorts 4

5,607 views

Today I created 3 more videos with Deforum in RunDiffusion. I used the RevAnimated model, DPM++ SDE Karras Sampler and 3D motion effects to once again...

Deforum YouTube Shorts 3

9,858 views

More videos created with Deforum, Stable Diffusion 1.5 and RevAnimated 1.2.2 models, Euler A and DPM++ SDE Karras samplers, and both 2D and 3D oscilla...

Deforum YouTube Shorts 1

5,262 views

I use Stable Diffusion Deforum, Run Diffusion, and ChatGPT to generate YouTube shorts with incredible visuals and licensed music.

Deforum YouTube Shorts 2

6,840 views

I continue using Stable Diffusion Deforum with the RevAnimated model. Here are a few videos of ocean animals, Valkyries, and Formula 1.

Swapping Faces in Midjourney

5,765 views

I use the InsightFace analysis library bot in Midjourney to add my face to any image I generate.

Running Stable Diffusion Locally

8,687 views

Today I installed Stable Diffusion locally, launched the web UI, and used a model I trained previously on my own face to generate pictures of me in di...

Stably Diffusing Myself

9,266 views

I use Dreambooth, Google Colab, and Hugging Face to train Stable Diffusion on my face so I can generate pictures of myself in different worlds and sty...

Supercars Soundtrack

7,921 views

Here is the soundtrack I created for the Supercars video I posted to my children's YouTube channel. I did everything in single takes. So I just came u...

YouTube Shorts with Licensed Music

5,673 views

Here are some YouTube Shorts I've created the past few weeks that utilize licensed, popular music available when uploading a Short from your phone.

Kids Talk AI YouTube Channel

5,702 views

I created a YouTube channel on artificial intelligence that includes facts, fears and thoughts on the future presented by an AI child's voice. It is i...

Presenting Jay-Z with Pictory

9,051 views

I use ChatGPT and Pictory to create a video with quotes and lyrics from Jay Z. I'm able to finish the 4-minute video in 40 minutes. Check out the vide...

Some Wisdom from Jiddu Krishnamurti

8,229 views

I've started a YouTube channel called Rise and Shine Quotes that aims to resurrect the words of forgotten thinkers and present them in digestible vide...

Using Pictory to Present McLuhan

7,732 views

I use Pictory AI to create a four-minute video in under an hour. Now I can focus on testing different niches for my videos more quickly with the help ...

Sci-Fi Art with DALL-E

8,886 views

Here are some sci-fi themed outputs from DALL-E. With the right prompt, it can produce some very interesting images. And until Midjourney is available...

Midjourney: Banners and Landscapes

9,250 views

I used Midjourney to create background images, YouTube banners, and landscapes for videos, channels and games. I'm learning about animation and creati...

Supercars Video

6,497 views

I used Midjourney to create images of Supercars in an anime vaporwave style for a children's video. I wanted to see the cars at night in the rain stre...

ABCs Video

8,792 views

I used Midjourney to create some interesting mashups of letter and word for a children's video on the ABCs. Check out the video and some of the pictur...

Animals In Clothes Video

5,321 views

Here are some of the raw DALL-E images that became assets in my video "Animals In Clothes". I was trying to generate old-looking paintings in the styl...

Emergency Vehicles Video

6,599 views

Here is a video I made recently and the raw Midjourney images I used as assets. I was going for a Pixar Cars style because my son is currently obsesse...

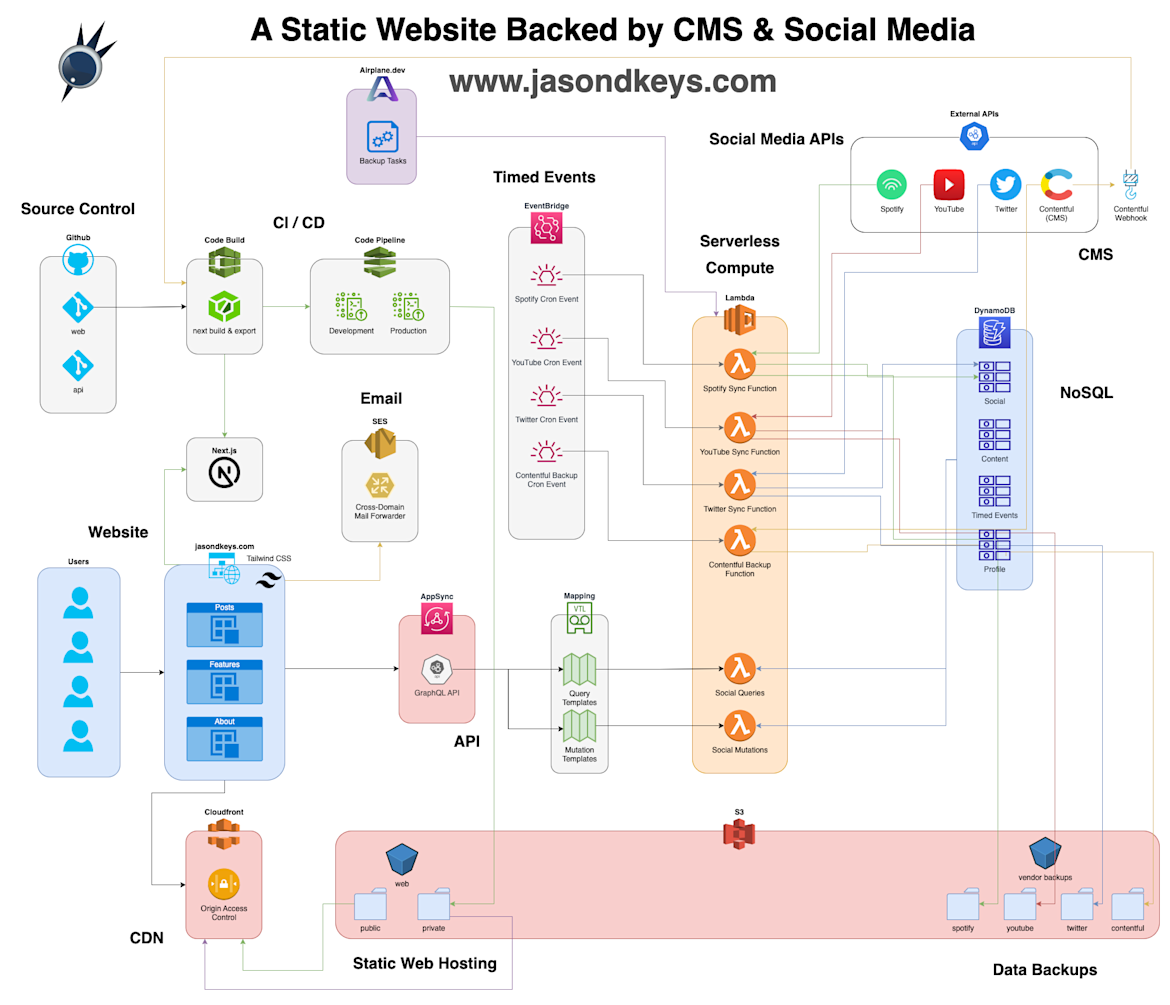

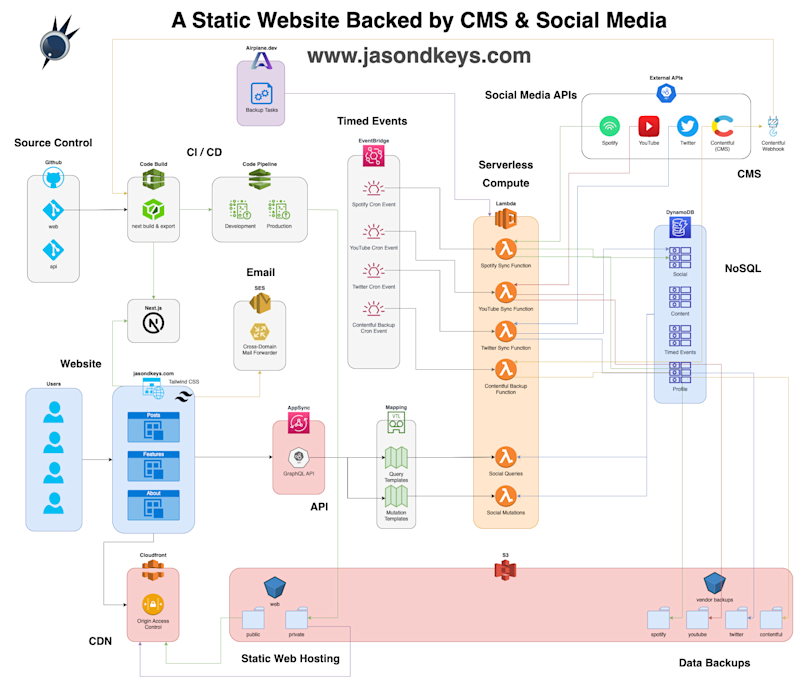

A Dynamically Static Website

6,368 views

Check out how this website works, the technology and tools that come together to make a static website come alive, and how it costs nothing to run.

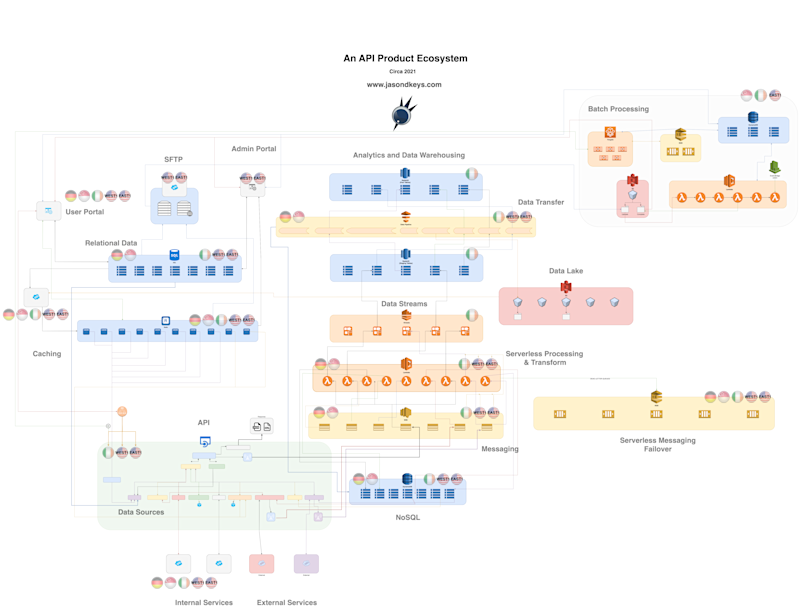

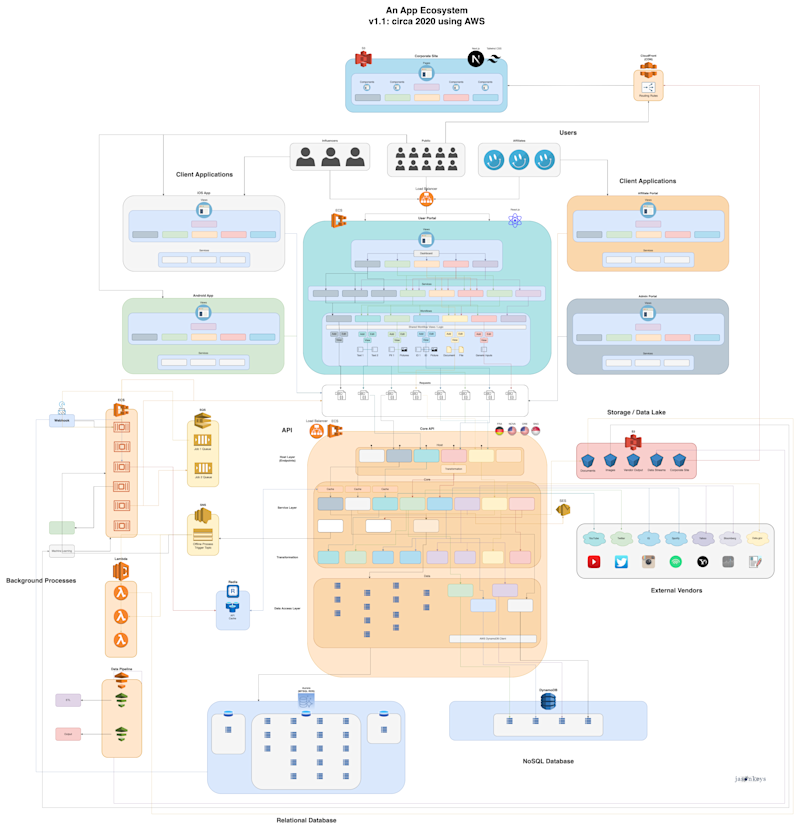

The Architecture of a Robust API

6,630 views

A technical picture that shows how a large API product may look in the AWS ecosystem, its web of connections and the high-level concerns in each area ...

Code Releases and Competitive Advantage

9,458 views

Explores the changes CI/CD brings to company with an economic lens, seeing how the frequency of code releases represents a competitive advantage for t...

Outsourcing Web Development to AI

5,819 views

ChatGPT plays the role of teacher and junior developer to me as I build user interfaces and regain my sanity.

Learning the Developer Mindset

7,757 views

Discusses how software developers must continually reinvent themselves and learn new tools to stay relevant.

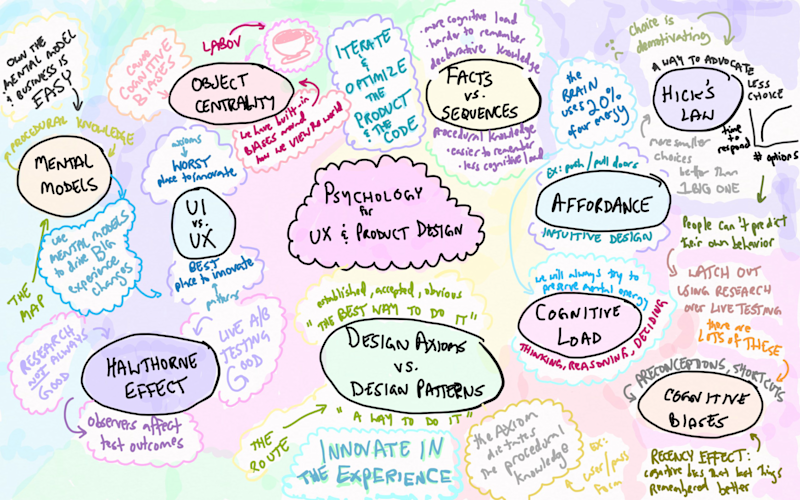

Psychology for UX & Product Design

7,466 views

Summary notes of an online workshop called Psychology for UX and Product Design where I learned about cognitive load, affordance and Hick's Law.

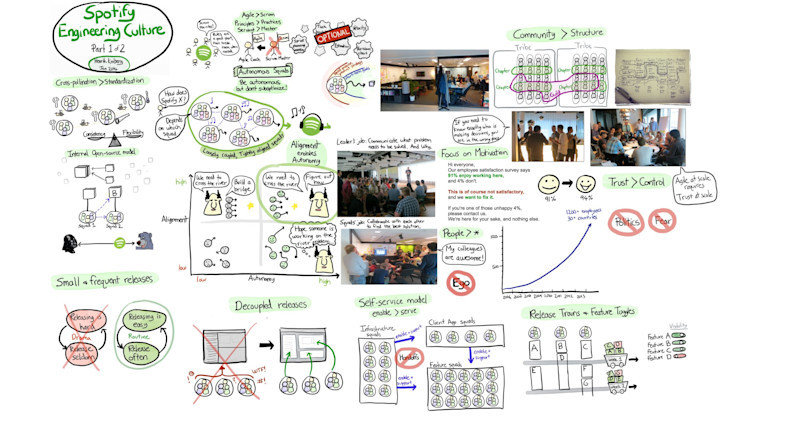

How I Make Technical Diagrams

5,849 views

Discusses how I collaborate on requirements, sketch out solutions, and produce beautiful technical diagrams.

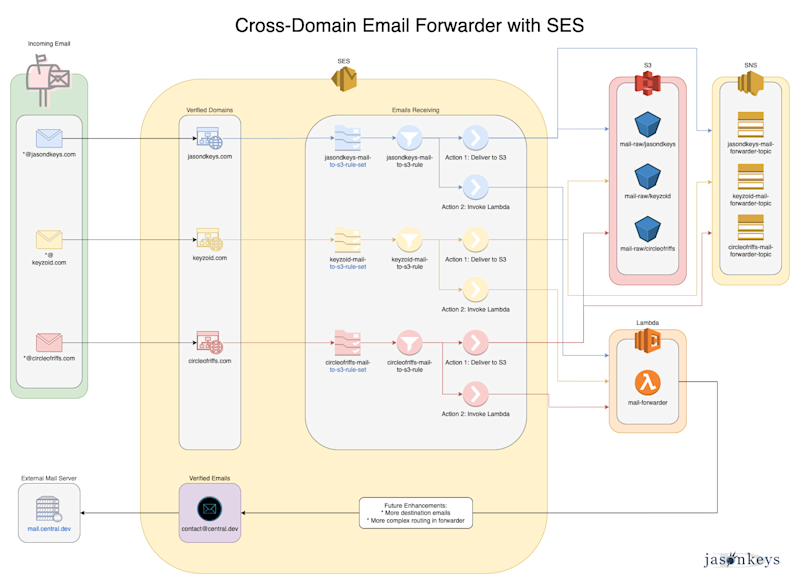

Implementing a Cross-Domain Email Forwarder with SES

5,437 views

Using AWS SES to forward disposable cross-domain email addresses to a shared third-party mail server.

A Startup Story: How Dev Teams Take Control

5,557 views

How an engineering team takes control in a startup by controlling the speed and integrity of the release process.

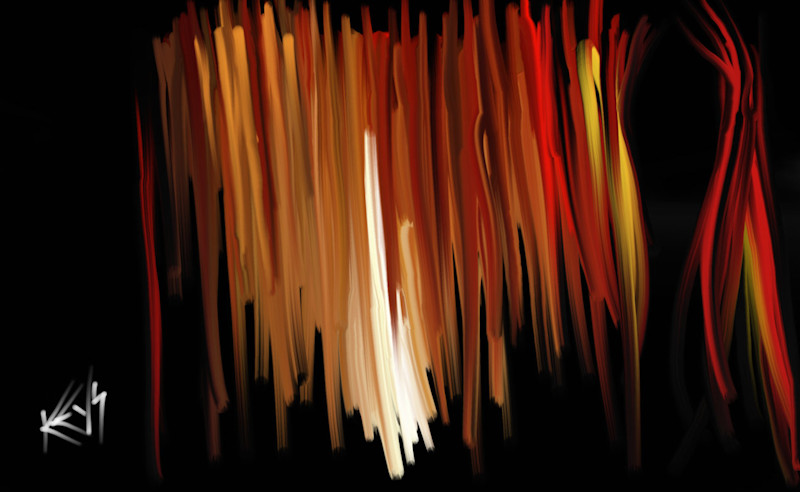

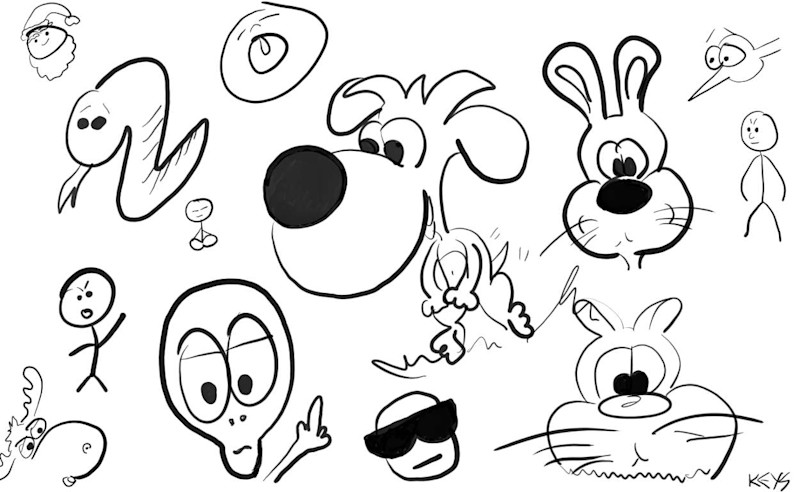

Cartoon Poses and Cameos

8,053 views

I've always loved drawing cartoons. My school notebooks growing up had every inch covered with them. I still draw them all the time. Sometimes on a ta...

Quotes

"In solving problems, technology creates new problems, and we seem to have to keep running faster and faster to stay where we are."